屏幕后处理(四)

本文主要参考Unity Shader-Ambient Occlusion环境光遮蔽

SSAO

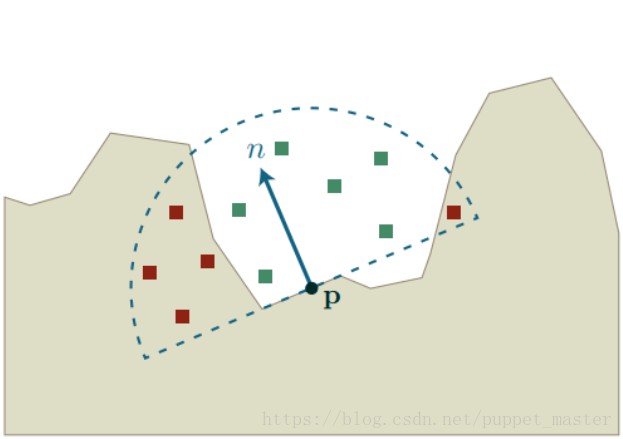

SSAO是通过屏幕空间计算获得的环境光遮蔽,对于最基本的SSAO其主要思路是在像素点的法线方向的半球中随机撒一些采样点,通过深度图来判断这些采样点是否被物体遮挡,被遮挡的点的百分比越大,则AO值越大

核心代码如下,具体实现细节已注释

v2f vert_ao(appdata v)

{

v2f o;

o.vertex = UnityObjectToClipPos(v.vertex);

o.uv = v.uv;

float4 clipPos = float4(v.uv * 2 - 1.0, 1.0, 1.0);

float4 viewRay = mul(_InverseProjectionMatrix, clipPos);

//获得视空间下和该顶点同方向的远裁面坐标

o.viewRay = viewRay.xyz / viewRay.w;

return o;

}

fixed4 frag_ao(v2f i) : SV_Target

{

fixed4 col = tex2D(_MainTex, i.uv);

float linear01Depth;

float3 viewNormal;

float4 cdn = tex2D(_CameraDepthNormalsTexture, i.uv);

DecodeDepthNormal(cdn, linear01Depth, viewNormal);

//根据方向和视空间深度,由相似三角形可以获得视空间的位置

float3 viewPos = linear01Depth * i.viewRay;

viewNormal = normalize(viewNormal);

int sampleCount = _SampleKernelCount;

float oc = 0.0;

UNITY_LOOP

for (int i = 0; i < sampleCount; i++)

{

float3 randomVec = _SampleKernelArray[i].xyz;

//如果随机点的位置与法线反向,那么将随机方向取反,使之保证在法线半球

randomVec = dot(randomVec, viewNormal) < 0 ? -randomVec : randomVec;

//随机点越远,权重越低

float pow = 1 - dot(randomVec, randomVec);

float3 randomPos = viewPos + randomVec * _SampleKeneralRadius;

float3 rclipPos = mul((float3x3)unity_CameraProjection, randomPos);

float3 rscreenPos = (rclipPos.xyz / rclipPos.z) * 0.5 + 0.5;

float randomDepth;

float3 randomNormal;

float4 rcdn = tex2D(_CameraDepthNormalsTexture, rscreenPos.xy);

DecodeDepthNormal(rcdn, randomDepth, randomNormal);

//获得了随机点的视空间01下的深度,需要乘以远裁面才能得到实际深度

randomDepth *= _ProjectionParams.z;

//如果深度差过大则说明是边缘点,深度值无效

float range = pow * smoothstep(0.0, 1.0, _SampleKeneralRadius / abs(randomDepth + randomPos.z));

float ao = (randomDepth + _DepthBias * _ProjectionParams.z) < -randomPos.z ? 1.0 : 0.0;

oc += ao * range;

}

oc /= sampleCount;

oc = max(0.0, 1 - oc * _AOStrength);

col.rgb = oc;

return col;

}

获得AO效果如下

HBAO

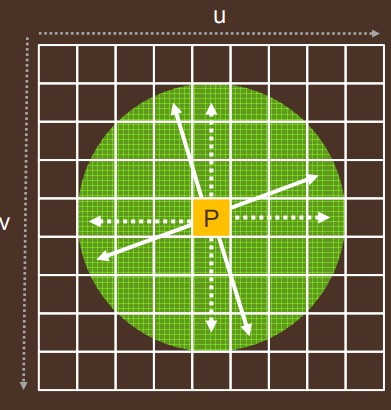

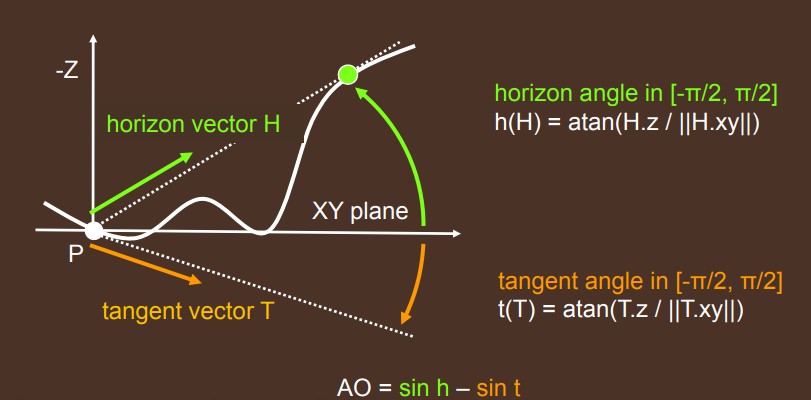

相比于SSAO依据采样点边上的点(视空间)是否被遮挡来计算遮蔽值,HBAO是依据采样点边上的点(屏幕空间)的凸起程度来计算遮蔽值

,原论文PPT,其流程是将UV附近的圆形区域切分为多个方向

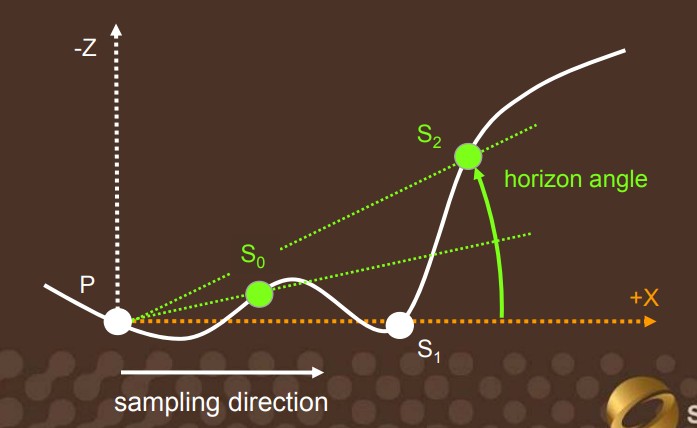

在一个方向上看,根据深度图的深度数值可以获得一个深度变化的线性图

原文中的AO值计算是根据xy屏幕和PS的夹角以及xy屏幕和切线的夹角计算,数值为sinh - sint,这边其实笔者没有理解为啥不是sin(h + t)

一下为简化实现的核心代码

fixed4 frag_ao(v2f i) : SV_Target

{

//1.0/width 1.0/height

float2 InvScreenParams = _ScreenParams.zw - 1.0;

fixed4 col = tex2D(_MainTex, i.uv);

float3 viewPos = ReconstructViewPos(i.uv);

float4 cdn = tex2D(_CameraDepthNormalsTexture, i.uv);

//由于我们uv转viewPost的z值实际是 -z,这边 viewNormal的z值也需要取反

float3 viewNormal = DecodeViewNormalStereo(cdn) * float3(1.0, 1.0, -1.0);

//将遍历圆划分为多块

float rayAngleSize = 2.0 * UNITY_PI / _RayAngleStep;

//每一块都分为多次步进

float rayMarchingStepSize = _SampleKeneralRadius / _RayMarchingStep;

float oc = 0.0;

for (int j = 0; j < _RayAngleStep; j++)

{

float2 rayMarchingDir = GetRayMarchingDir(j * rayAngleSize);

float oldangle = _AngleBiasValue;

//每次步进偏移的像素= 为方向 * 大小 * 单位像素

float2 deltauv = rayMarchingDir * rayMarchingStepSize * InvScreenParams;

UNITY_LOOP

for (int k = 1; k < _RayMarchingStep; k++)

{

float2 uv = k * deltauv + i.uv;

float3 sviewPos = ReconstructViewPos(uv);

//获得像素点到步进点的方向向量即PS

float3 svdir = sviewPos - viewPos;

float len = length(svdir);

//PS和切线的夹角等于 90 - PS和法线的夹角

float angle = UNITY_PI * 0.5 - acos(dot(viewNormal, normalize(svdir)));

if (angle > oldangle)

{

float value = sin(angle) - sin(oldangle);

//防止边缘点记录AO

float range = len < _SampleKeneralRadius ? 1.0 : 0.0;

//越远AO权重越低,防止颜色突变,效果更真实

float atten = Falloff2(k, _RayMarchingStep) * range;

oc += value * atten;

oldangle = angle;

}

}

}

//最后AO等于每个方向的AO的均值

oc *= 1.0 / (_RayAngleStep)*_AOStrength;

oc = 1.0 - oc;

col.rgb = oc;

return col;

}

//依据uv获得ViewPos

float3 ReconstructViewPos(float2 uv)

{

float3x3 proj = (float3x3)unity_CameraProjection;

float2 p11_22 = float2(unity_CameraProjection._11, unity_CameraProjection._22);

float2 p13_31 = float2(unity_CameraProjection._13, unity_CameraProjection._23);

float depth;

float3 viewNormal;

float4 cdn = tex2D(_CameraDepthNormalsTexture, uv);

DecodeDepthNormal(cdn, depth, viewNormal);

depth *= _ProjectionParams.z;

//这边是依据视空间的z值和uv值反推viewPos

//根据变换公式 uv.x = (p11 * v.x / (-z) + 1) * 1/2 ,uv.y = (p22 * v.y / (-z) + 1) * 1/2

//反推得 v.x = (uv.x * 2 - 1 ) / p11 * (-z) , v.y = (uv.y * 2 - 1 ) / p11 * (-z)

//即 v.xy = u(v * 2 - 1) / p11_22 * depth (这边depth = -z)

//至于这边为何还要减去p13_31,笔者也不是很清楚,当时Unity后处理中的原函数就是这么写的,猜测是偏移值,如果没有偏移是(0,0)

return float3((uv * 2.0 - 1.0 - p13_31) / p11_22 * (depth), depth);

}

效果如下

双边滤波算法

博客中介绍了一种双边滤波算法用于去噪,相较于高斯模糊,双边过滤会在考虑距离的权重意外额外加入一个权重,这个权重可以是颜色、法线等,让颜色差和法线方向差异较大的像素在最终像素的占比权值很低,从而达到保留边缘的效果,代码如下

1 | float3 GetNormal(float2 uv) |

All articles in this blog are licensed under CC BY-NC-SA 4.0 unless stating additionally.